The Privacy Challenges of Digital Contact Tracing

The Apple-Google Contact Tracing Protocol Is Not Completely Private

Over the past two weeks, Alice spent most of her time at home. However, she went to the grocery store a few times, walked to the drug store, and picked up food from her favorite takeout places. Today, Alice tests positive for COVID-19. How did she get sick, and who might she have infected in turn? To find out, health professionals turn to contact tracing: finding all the people who may have come into contact with someone who was infected, and urging them to get tested. While contact tracing is usually a manual and labor-intensive process, governments hope to use modern technology like smartphones to help them with this task, weighing a loss of privacy against keeping their citizens healthy. Apple and Google have jointly proposed a way to use smartphones for contact tracing while allowing users to maintain some privacy. However, this protocol comes with some key privacy tradeoffs.

Contact tracing has obvious privacy implications. China has created its own contact tracing app, which allows the government to track the names of everyone who has tested positive for the virus, as well as anyone those people may have had contact with. This level of state surveillance is not something people living in other countries would easily accept. Other countries, including Germany, Australia, and Iceland, are also developing their own apps. These apps mostly rely on local laws governing medical data to maintain user privacy. However, the data itself is highly sensitive and would be a choice prize for future attackers. For example, Anthem (a large US health insurance provider) was hacked in 2015, leading to the leak of 37.5 million health records. On the other side of the pond, the UK's NHS was particularly hard hit by the 2017 WannaCry cyber-attack because it was still relying on the out-of-date Windows XP operating system.

The solution jointly proposed by Apple and Google will make use of smartphones for contact tracing while collecting minimal data from users, thus hoping to strike a balance between users’ legitimate privacy concerns and the need to tackle the COVID-19 pandemic*.

How It Works

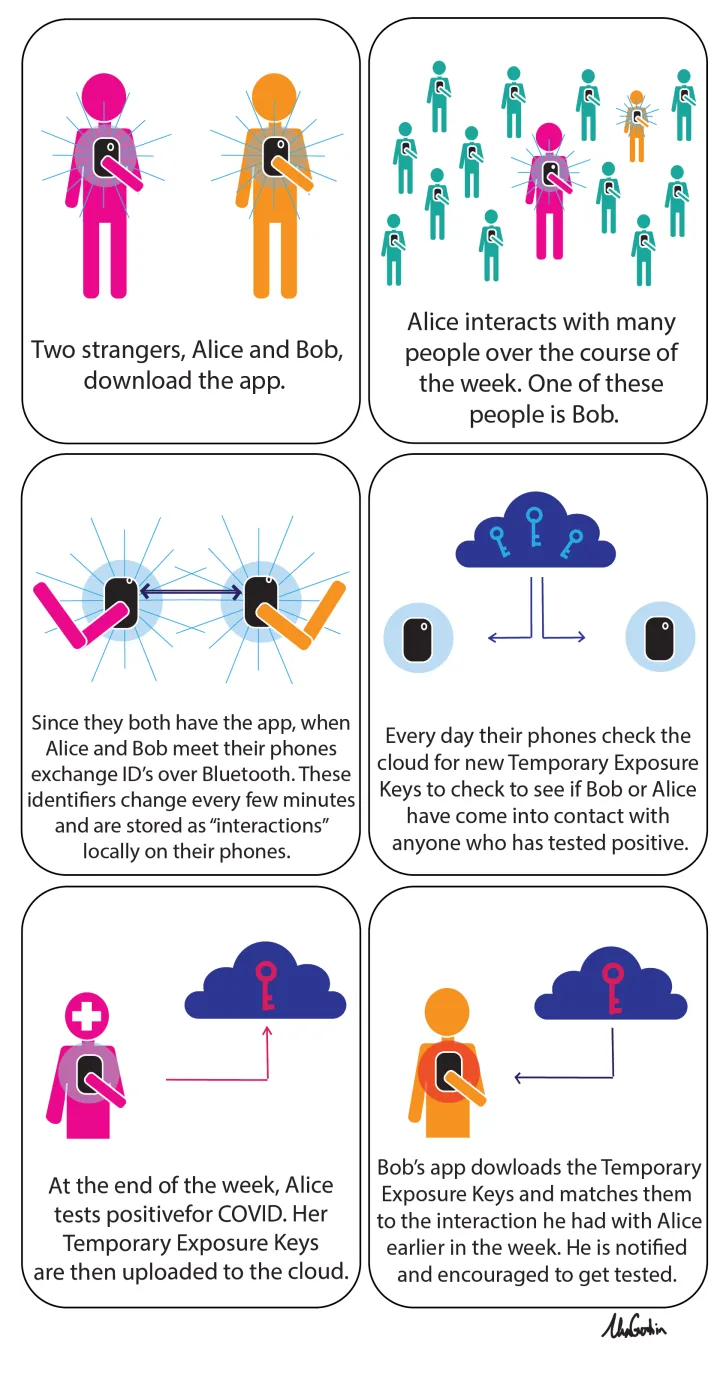

Consider Alice, who installs the app on her phone. After a week, she tests positive for COVID-19. The app would then notify everyone who has come into contact with Alice over the last 14 days. If Alice and Bob were in contact in the last several days, and Bob also has the app installed on his phone, he would receive a notification that he should get tested for COVID-19.

If Bob never tests positive, then using the app poses no privacy risk to Bob. When Alice tests positive, a key to her interactions (but not her identity or location) is uploaded to a central server. This gives Alice some anonymity, but it is not completely private. In essence, Alice is willingly giving up some of her privacy in order to warn and protect the people she may have been in contact with.

The protocol, ironically, works like a handshake: when two people meet, their phones exchange anonymous IDs over Bluetooth, and these IDs change every few minutes. The IDs are stored locally on each phone as “interactions”. When Alice tests positive, a key to her interactions is uploaded to a central server. Bob’s phone checks the server every day for new keys from people who have tested positive. It downloads those keys, and Bob’s app uses them to search his list of interactions and check whether he has come into contact with any of those people. If so, he is notified and encouraged to get tested as well.
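The exchange-and-match flow above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the Apple-Google implementation: it covers a single day, assumes 144 ten-minute intervals per day, and uses HMAC-SHA256 truncated to 16 bytes as a stand-in for the key derivation defined in the published specification (which has changed between versions). The `Phone` class and `rolling_id` function are hypothetical names chosen for illustration.

```python
import hashlib
import hmac
import os

INTERVALS_PER_DAY = 144  # assumption: one new rolling ID every 10 minutes


def rolling_id(daily_key: bytes, interval: int) -> bytes:
    """Derive the ID broadcast during one interval from a daily key.

    Simplified stand-in: HMAC-SHA256 truncated to 16 bytes, not the
    specification's exact derivation.
    """
    msg = b"EN-RPI" + interval.to_bytes(4, "little")
    return hmac.new(daily_key, msg, hashlib.sha256).digest()[:16]


class Phone:
    def __init__(self):
        self.daily_key = os.urandom(16)  # stays on the phone unless the user tests positive
        self.heard = set()               # rolling IDs received from nearby phones

    def broadcast(self, interval: int) -> bytes:
        return rolling_id(self.daily_key, interval)

    def listen(self, rid: bytes) -> None:
        self.heard.add(rid)

    def check_exposure(self, published_keys: list) -> bool:
        # Re-derive every ID each published key could have produced that day
        # and look for any overlap with the locally stored interactions.
        for key in published_keys:
            for i in range(INTERVALS_PER_DAY):
                if rolling_id(key, i) in self.heard:
                    return True
        return False


alice, bob, carol = Phone(), Phone(), Phone()

# Alice and Bob meet during interval 42: their phones swap rolling IDs.
bob.listen(alice.broadcast(42))
alice.listen(bob.broadcast(42))

# Alice tests positive and uploads her daily key to the server.
server = [alice.daily_key]

print(bob.check_exposure(server))    # True
print(carol.check_exposure(server))  # False
```

Note that the matching happens entirely on Bob's phone: the server only ever sees keys from people who chose to upload them.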

It’s Not Completely Private

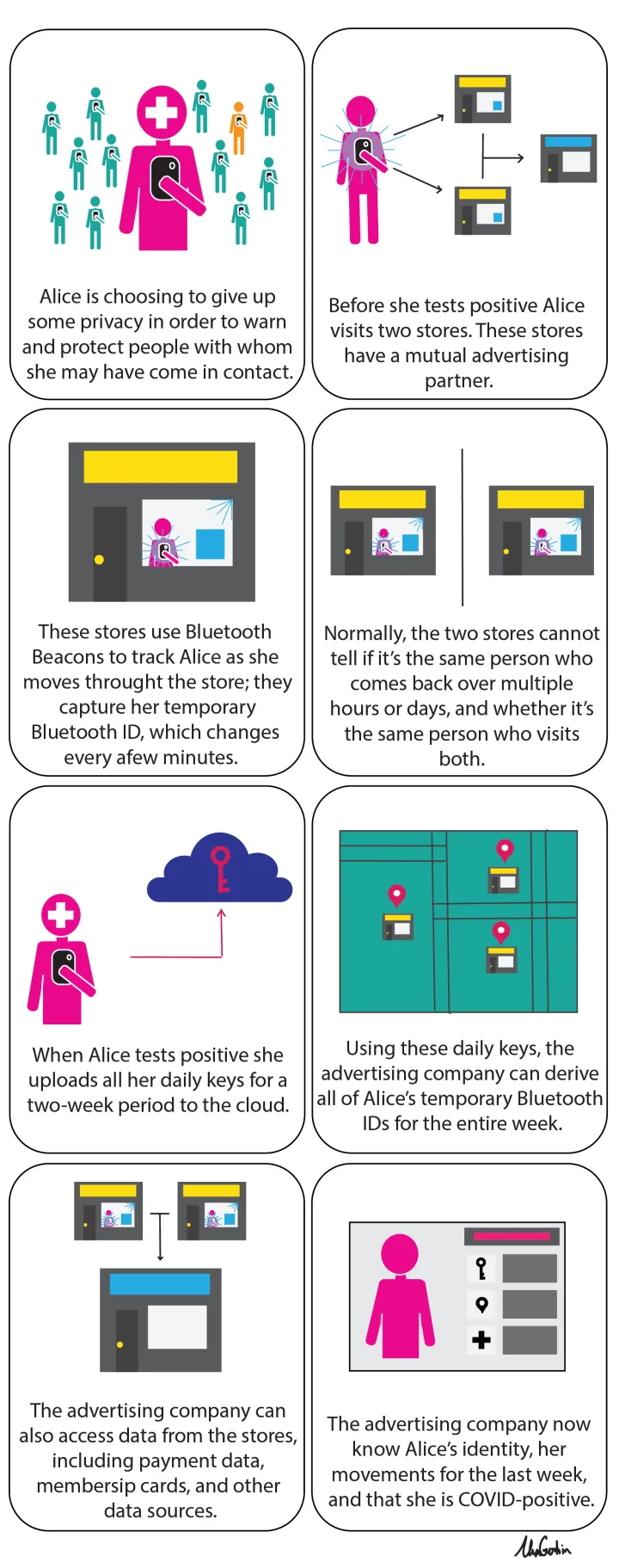

Unfortunately, this protocol is not completely private. Many stores use Bluetooth Beacons which track shoppers’ locations. These stores may also share the data that the Bluetooth Beacons collect with advertising companies, who can aggregate data from multiple stores.

Take Alice from the previous example. Throughout the week, Alice visits a variety of stores, including C-Mart and D-Mart, which work with a common advertising partner, Eve Corp. Both C-Mart and D-Mart use Bluetooth Beacons to track Alice as she moves through the store. The beacons capture her temporary Bluetooth ID, which changes every few minutes. Normally, C-Mart and D-Mart cannot track Alice between the stores or over different days, since Alice’s Bluetooth ID changes so regularly.

If Alice uses the Apple-Google protocol and tests positive, she uploads all her daily keys for the previous two-week period to the server. Using these daily keys, Eve Corp can derive every temporary Bluetooth ID that Alice’s phone broadcast during that period, including the week of her store visits. Eve Corp can then use the data gathered by the Bluetooth Beacons in C-Mart and D-Mart to correlate her movements for the week.
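The correlation attack can be sketched the same way. The beacon log, store names, and interval numbers below are made up for illustration, and `rolling_id` is a simplified stand-in derivation (HMAC-SHA256 truncated to 16 bytes, assuming 144 intervals per day), not the specification's exact construction. The point is the join: once a daily key is public, every ID it produced can be regenerated and matched against sightings that were previously unlinkable.

```python
import hashlib
import hmac
import os

INTERVALS_PER_DAY = 144  # assumption: one rolling ID per 10-minute interval


def rolling_id(daily_key: bytes, interval: int) -> bytes:
    # Simplified stand-in for the specification's key derivation.
    msg = b"EN-RPI" + interval.to_bytes(4, "little")
    return hmac.new(daily_key, msg, hashlib.sha256).digest()[:16]


# Hypothetical beacon logs shared with Eve Corp: (store, interval, ID heard).
alice_key = os.urandom(16)
stranger_key = os.urandom(16)
beacon_log = [
    ("C-Mart", 50, rolling_id(alice_key, 50)),
    ("C-Mart", 51, rolling_id(stranger_key, 51)),
    ("D-Mart", 90, rolling_id(alice_key, 90)),
    ("D-Mart", 91, rolling_id(alice_key, 91)),
]


def link_sightings(published_keys, log):
    """Group beacon sightings by the published key that generated them."""
    # Precompute every ID each published key could have broadcast that day.
    id_to_key = {
        rolling_id(key, i): key
        for key in published_keys
        for i in range(INTERVALS_PER_DAY)
    }
    trails = {}
    for store, interval, rid in log:
        key = id_to_key.get(rid)
        if key is not None:
            trails.setdefault(key, []).append((store, interval))
    return trails


# Alice tests positive; her daily key becomes public.
trails = link_sightings([alice_key], beacon_log)
print(trails[alice_key])  # [('C-Mart', 50), ('D-Mart', 90), ('D-Mart', 91)]
```

Without the published key, Alice's three sightings are just unrelated random-looking IDs; with it, they collapse into a single trail across both stores, while the stranger's sighting stays unlinked.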

It would not be difficult to then determine who Alice is based on her payment data, membership card, or other data sources that the stores can use. The stores now have significant information about Alice: her movements for the last week, and that she is COVID-positive.

Finally, the Apple-Google protocol specifies that there should be some limitation on who can upload data on infected individuals into the system to prevent abuse. However, it leaves it to local governments, who will create the apps, to ensure that only people who have tested positive can upload their data. Exactly how this is managed will be key to assessing the privacy implications of any contact tracing app, as well as how easily the system can be “poisoned” with false data.

For example, if Alice needs to provide a government ID to the app, then all sorts of issues arise around how that data is managed. What data will be sent to the government? Will the government learn that you have tested positive? Will third-party vendors be involved in processing the data? Many of the advantages of a private contact-tracing protocol are eroded if this data isn’t carefully handled. Past breaches also raise doubts about whether some government systems are secure enough to safeguard personal and medical data. For example, the US Office of Personnel Management had 21.5 million records stolen in 2015, including 5.6 million fingerprints.

Can It Be Improved?

When Alice tests positive, the Apple-Google protocol uploads her Temporary Exposure Keys (the daily keys described above), thus linking all of her interactions from each day. This allows advertisers to track Alice throughout the day across multiple stores and potentially de-anonymize her. The PACT protocol, developed by MIT and other academic groups, uploads the interactions individually, without a Temporary Exposure Key. Thus, the interactions are not linked in the database, which makes it harder for advertisers to track Alice. This comes at the cost of storing more data in the database, making it more expensive for governments to run the program. We believe this is a price worth paying to protect user privacy.
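The extra storage cost of uploading identifiers individually is simple arithmetic. The parameters below are assumptions for illustration only: 16-byte identifiers and keys, one identifier per 10-minute interval (144 per day), and the 14-day upload window. Under these assumptions, each positive user's upload grows by a factor of 144.

```python
ID_BYTES = 16            # assumed size of one identifier or key
INTERVALS_PER_DAY = 144  # assumed: one new identifier every 10 minutes
DAYS = 14                # upload window described in the article


def upload_size(linked: bool) -> int:
    """Bytes one positive user uploads under each (simplified) scheme."""
    if linked:
        # One key per day; every identifier can be re-derived from it.
        return DAYS * ID_BYTES
    # Every identifier is uploaded on its own, so none can be linked.
    return DAYS * INTERVALS_PER_DAY * ID_BYTES


print(upload_size(linked=True))   # 224
print(upload_size(linked=False))  # 32256
```

Multiplied across every positive user, and downloaded by every phone that checks for exposures, this is the kind of overhead the trade-off refers to; real figures depend on each protocol's actual identifier size and rotation interval.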

We believe that any contact-tracing protocol should also tackle the question of system abuse. A potential solution is discussed in the PEPP-PT specification: anonymous links, which separate identity checking from providing and uploading test results. Therefore, we would like to see either Apple and Google, or governments, directly address the question of who can upload data to the system, and what documentation they will need to do so.
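To make the idea of separating identity checking from uploads concrete, here is a minimal sketch of one way it could work. A health authority verifies the test result and hands out a random single-use code; the upload server accepts keys from anyone presenting a valid code without ever learning who they are. This is a hypothetical illustration (the class names, the code format, and the shared set of code hashes are all assumptions), not the PEPP-PT mechanism itself.

```python
import hashlib
import secrets


class HealthAuthority:
    """Verifies who has tested positive; never sees any exposure keys."""

    def __init__(self):
        self.valid_code_hashes = set()  # shared with the upload server; holds no identities

    def issue_code(self, patient_id, tested_positive):
        # Identity checking happens here and only here; patient_id stands in
        # for whatever documents are checked (out of scope for this sketch).
        if not tested_positive:
            return None
        code = secrets.token_urlsafe(16)
        # Only a hash of the code is kept, and it is not stored next to patient_id.
        self.valid_code_hashes.add(hashlib.sha256(code.encode()).hexdigest())
        return code  # handed to the patient, e.g. alongside the test result


class UploadServer:
    """Accepts keys from anyone holding a valid, unused code; learns no identity."""

    def __init__(self, authority):
        self.authority = authority
        self.published_keys = []

    def upload(self, code, daily_keys):
        digest = hashlib.sha256(code.encode()).hexdigest()
        if digest not in self.authority.valid_code_hashes:
            return False  # unknown or already-used code: reject as possible poisoning
        self.authority.valid_code_hashes.discard(digest)  # each code works once
        self.published_keys.extend(daily_keys)
        return True


authority = HealthAuthority()
server = UploadServer(authority)

code = authority.issue_code("alice-id-document", tested_positive=True)
print(server.upload(code, [b"\x01" * 16]))            # True
print(server.upload(code, [b"\x02" * 16]))            # False: code already used
print(server.upload("made-up-code", [b"\x03" * 16]))  # False: never issued
```

The weak point of this sketch is trust: if the authority logs which code went to whom, or colludes with the upload server, the link can be rebuilt. Designs that need stronger guarantees can use cryptographic tools such as blind signatures, so that even the issuer cannot link a code to its later use.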

The Dilemma

Of course, Apple and Google have to strike a balance between protecting users’ privacy and keeping them safe from COVID-19. Even with the current design, France’s government has said that the protocol is too privacy-preserving and does not meet its needs. On the other hand, once technology is built into phones, it is hard to control and reliably undo. Since Google and Apple are global companies, any functionality they provide for France’s government could also be used by more authoritarian governments for nefarious purposes (e.g., to track journalists and dissidents after the pandemic is over). Striking the right balance at this difficult time is therefore critically important.

Footnotes

* Apple and Google have more recently revised their proposal with details supplied by the DP-3T protocol, but it still lacks other privacy enhancements offered by DP-3T, such as rolling proximity identifier (RPI) spreading with k-of-n secret sharing.
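The k-of-n secret sharing mentioned above can be illustrated with Shamir's scheme: an identifier is split into n shares, any k of which reconstruct it, while fewer than k reveal nothing about it. Intuitively, a phone that broadcasts one share at a time is only recognizable to receivers that stay in range long enough to collect k shares. This is a generic sketch; the prime field and the parameters k = 3 and n = 10 are arbitrary choices, and DP-3T's actual construction may differ in its details.

```python
import secrets

PRIME = 2**521 - 1  # a Mersenne prime, comfortably larger than a 128-bit ID


def split(secret: int, k: int, n: int):
    """Split `secret` into n shares (x, y); any k of them reconstruct it."""
    # Random polynomial of degree k-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def combine(shares):
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


rpi = int.from_bytes(secrets.token_bytes(16), "big")  # a 16-byte rolling ID
shares = split(rpi, k=3, n=10)  # broadcast one share at a time

print(combine(shares[:3]) == rpi)                          # True
print(combine([shares[0], shares[5], shares[9]]) == rpi)   # True
# With only two shares, combine() returns an unrelated field element.
```

Any three of the ten broadcasts are enough to recover the ID, so a phone that stays nearby still records the contact, while a device that catches only one or two broadcasts learns nothing about it.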
