Analyzing Support Desk Data to Better Understand and Protect Users

A Field Study of Computer-Security Perceptions Using Anti-Virus Customer-Support Chats

How do users conceptualize computer-security problems?

Do users accurately diagnose security problems?

What are the most pressing security problems that users need help with?

Answering these questions is important for improving technology and better protecting users.

We set out to answer these questions by analyzing problem descriptions that NortonLifeLock's support team received from customers requesting technical support. This data provides a unique opportunity to explore users' perceptions of computer security in the context of the problems that they face in practice.

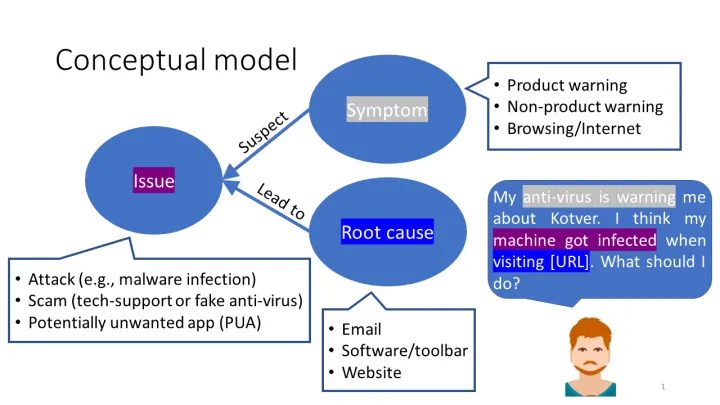

Using a mixture of qualitative and quantitative methods, we analyzed a total of 189,272 problem descriptions that users submitted from 2015 to 2018. First, we manually analyzed 150 problem descriptions to find themes in the data and identify relationships between them. This analysis allowed us to model how users conceptualize and diagnose security problems. In the resulting model, users perceive a variety of symptoms that lead them to suspect security issues and potential root causes of these issues. For instance, a user might observe a warning from the Endpoint Protection software (symptom), which might lead them to suspect a malware infection (issue) that occurred when they downloaded an email attachment (root cause).
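The symptom, issue, and root-cause chain described above can be sketched as a small record type. This is only an illustration: the class name, field names, and example text are hypothetical and are not taken from the study's actual coding scheme.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProblemDescription:
    """One support request, annotated with what the user perceived.

    All field names are illustrative assumptions, not the study's schema.
    """
    text: str
    symptom: Optional[str] = None      # what the user observed
    issue: Optional[str] = None        # what the user suspects is wrong
    root_cause: Optional[str] = None   # what the user believes caused it

    def chain(self) -> str:
        """Render the symptom -> issue -> root cause chain, marking gaps."""
        parts = [self.symptom, self.issue, self.root_cause]
        return " -> ".join(p if p is not None else "unknown" for p in parts)


# Hypothetical example mirroring the scenario in the paragraph above.
example = ProblemDescription(
    text="I got a warning after opening an attachment; I think I have a virus.",
    symptom="security warning",
    issue="malware infection",
    root_cause="email attachment download",
)
print(example.chain())
```

Keeping each field optional reflects that a user may report a symptom without naming an issue or a cause.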

Using the themes we discovered, we labeled an additional 500 problem descriptions according to users' perceived symptoms and issues, as well as experts' diagnoses of the issues. This allowed us to measure the prevalence of symptoms and issues, and to quantify the agreement between users and experts. Among several findings, we discovered that attacks (e.g., malware infections), technical support scams, and potentially unwanted applications (PUAs) were the most prevalent issues that users encountered. Another noteworthy finding is that users' and experts' diagnoses generally agreed. For example, when users suspected scams and PUAs, experts were likely to diagnose the same issues. The main exception concerns attacks: users were often convinced by scammers that their computers were infected when they were not, and users often confused PUAs with actual malware infections.
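One way to quantify agreement between users and experts is sketched below. The post does not say which statistic was used, so both measures here (a per-issue agreement rate and Cohen's kappa) are assumptions chosen for illustration, and the labels are invented toy data, not results from the study.

```python
from collections import Counter


def agreement_by_issue(pairs):
    """For each user-suspected issue, the fraction of cases where the expert agreed."""
    totals, agreed = Counter(), Counter()
    for user_label, expert_label in pairs:
        totals[user_label] += 1
        if user_label == expert_label:
            agreed[user_label] += 1
    return {label: agreed[label] / totals[label] for label in totals}


def cohen_kappa(pairs):
    """Chance-corrected agreement between two labelers (Cohen's kappa)."""
    pairs = list(pairs)
    n = len(pairs)
    if n == 0:
        raise ValueError("need at least one labeled pair")
    observed = sum(u == e for u, e in pairs) / n
    user_counts = Counter(u for u, _ in pairs)
    expert_counts = Counter(e for _, e in pairs)
    # Agreement expected if both labelers chose independently at their own rates.
    expected = sum(user_counts[l] * expert_counts[l] for l in user_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both labelers always used the same single label
    return (observed - expected) / (1.0 - expected)


# Invented toy data: (user's suspected issue, expert's diagnosis).
toy_pairs = [
    ("scam", "scam"), ("scam", "scam"), ("pua", "pua"),
    ("attack", "scam"), ("attack", "pua"), ("attack", "attack"),
]
rates = agreement_by_issue(toy_pairs)
kappa = cohen_kappa(toy_pairs)
print(rates, round(kappa, 2))
```

In this toy data, suspected attacks have the lowest agreement rate, which mirrors the qualitative pattern described above without reproducing any actual figures.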

Our findings point to several directions for improving user security. For example, since technical support scams and PUAs are prevalent issues that users face, there is a pressing need to tailor technologies to protect users from these types of threats and nuisances. Moreover, our results uncovered several patterns in how users diagnose and report security issues that we could leverage to better support users, potentially via automatic means. In fact, preliminary experiments that we performed using all 189,272 problem descriptions demonstrated the feasibility of automatically and accurately extracting users' perceived symptoms and issues and predicting experts' diagnoses. Such automation may enable us to enhance user support in a variety of ways, including but not limited to:

- assigning support requests to qualified agents who are trained to give support on certain topics (e.g., assigning attack-related cases to agents trained to remove malware);

- retrieving the most relevant FAQs and tutorials that can help users solve issues or can educate them about certain topics (e.g., technical support scams); and

- automatically targeting questions to ask users in order to help agents diagnose issues.
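As a rough illustration of the first idea in the list above, the sketch below routes a problem description to an agent queue. It uses plain keyword matching as a stand-in for a trained model; this is not the method used in the experiments, and the keyword lists, issue names, and queue names are all hypothetical.

```python
from typing import Optional

# Hypothetical keyword lists; a real system would learn these from labeled data.
ISSUE_KEYWORDS = {
    "attack": ("virus", "malware", "infected", "trojan"),
    "tech_support_scam": ("pop-up", "phone number", "remote access", "called me"),
    "pua": ("toolbar", "adware", "unwanted program"),
}

# Hypothetical queue names for agents trained on each topic.
AGENT_QUEUES = {
    "attack": "malware_removal",
    "tech_support_scam": "scam_response",
    "pua": "pua_cleanup",
}


def predict_issue(text: str) -> Optional[str]:
    """Return the issue whose keywords match most often, or None if none match."""
    lowered = text.lower()
    scores = {
        issue: sum(keyword in lowered for keyword in keywords)
        for issue, keywords in ISSUE_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def route(text: str) -> str:
    """Pick an agent queue, falling back to general support when unsure."""
    return AGENT_QUEUES.get(predict_issue(text), "general_support")


print(route("A pop-up told me to call a phone number to fix my PC"))
print(route("My printer is slow"))
```

The fallback queue matters here: given that users often mistake scams and PUAs for infections, a production system would likely want to predict the expert's diagnosis rather than trust the user's own wording.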

We hope that our findings will help inform future research and technology development.

The work presented above was published earlier this year at the ACM Conference on Human Factors in Computing Systems, which was held in Glasgow, Scotland. Other authors on the paper include Kevin Roundy, Matteo Dell'Amico, Christopher Gates, and Daniel Kats from Norton Labs, and Lujo Bauer and Nicolas Christin from Carnegie Mellon University. The full paper can be found here.
